The following describes SagivTech’s experience developing an OpenCL implementation of a bilateral filter on the Samsung Galaxy S4 (I9500), which uses Imagination’s PowerVR SGX 544 GPU. We demonstrate the optimization steps we applied to the reference OpenCL code shown on the previous page. We chose the Galaxy S4 mainly because it contains an Imagination GPU and was one of the very first mobile platforms to support OpenCL out of the box. Getting up and running with OpenCL on it was very fast.

The Imagination platform

The following information and code have been tested on the Samsung Galaxy S4 mobile phone.

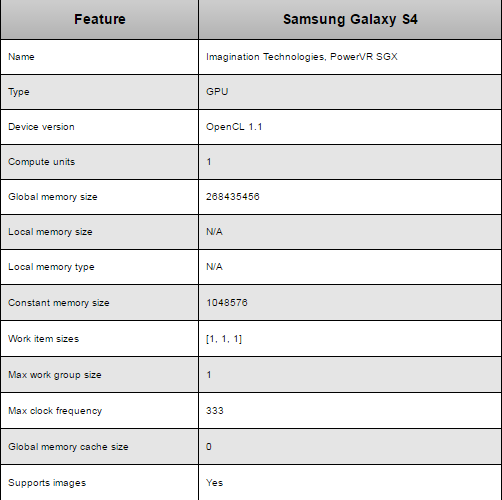

Here is a short summary of the Samsung Galaxy S4’s OpenCL properties:

OpenCL on the GPU

Benchmark methodology

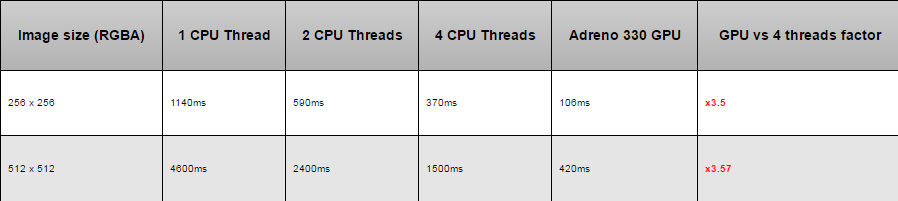

To benchmark the code, I used two image sizes:

- 256 x 256

- 512 x 512

Before measuring the kernel’s actual timings on the GPU, a warm-up is performed: the kernel is run 10 times on the GPU and those timings are discarded. Only then is the kernel run 50-100 times in a row, and the average time of that test is the one reported in the performance table below. This method aims to remove timing spikes and the effects of initialization going on behind the scenes. That said, the timings still fluctuate from run to run, so the values in the table below are averages over several such tests.
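The timing scheme above can be sketched in C++ as follows. This is a minimal illustration, not the harness used for the measurements: `average_runtime_ms` and the `run_once` callback are hypothetical names, and `run_once` is assumed to launch the kernel and block until it completes (for example by calling `clFinish` after `clEnqueueNDRangeKernel`), so that the measured time covers the GPU work rather than just the enqueue.

```cpp
#include <chrono>
#include <cstddef>
#include <functional>

// Warm-up runs are executed but not timed; the mean of the timed runs is
// returned in milliseconds. `run_once` is a hypothetical stand-in for one
// complete kernel launch (enqueue + wait for completion).
double average_runtime_ms(const std::function<void()>& run_once,
                          std::size_t warmup_runs = 10,
                          std::size_t timed_runs = 50) {
    // Warm-up: absorb one-time costs such as kernel compilation,
    // buffer allocation and driver initialization. Results are discarded.
    for (std::size_t i = 0; i < warmup_runs; ++i) {
        run_once();
    }
    if (timed_runs == 0) {
        return 0.0;
    }
    using steady = std::chrono::steady_clock;
    const auto start = steady::now();
    for (std::size_t i = 0; i < timed_runs; ++i) {
        run_once();
    }
    const auto stop = steady::now();
    // Convert the elapsed time to floating-point milliseconds.
    const std::chrono::duration<double, std::milli> total = stop - start;
    return total.count() / static_cast<double>(timed_runs);
}
```

Timing the whole batch and dividing by the number of runs gives the same mean as timing each run separately, while keeping clock overhead out of the loop.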

OpenCL code

To explore what Imagination’s PowerVR SGX 544 can deliver on the Samsung Galaxy S4, I started with a simple, non-optimized kernel that reads its data from global memory and processes one pixel per work-item. This kernel, described in the previous section, is the baseline for the optimizations discussed below.
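For readers without the previous section at hand, here is a rough C++ equivalent of such a naive kernel. It is a sketch under stated assumptions, not the original reference code: it assumes a single-channel float image, clamp-to-edge borders and Gaussian spatial and range weights. `bilateral_pixel` does the work of one work-item, and the two loops in `bilateral_filter` play the role of the 2D NDRange.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// One output pixel, computed the way a naive kernel would: every neighbour is
// fetched from (global) memory inside two nested loops. Assumptions: grayscale
// float image, clamp-to-edge borders, Gaussian spatial and range weights.
float bilateral_pixel(const std::vector<float>& in, int width, int height,
                      int x, int y, int radius,
                      float sigma_spatial, float sigma_range) {
    const float center = in[y * width + x];
    const float inv_2ss = 1.0f / (2.0f * sigma_spatial * sigma_spatial);
    const float inv_2sr = 1.0f / (2.0f * sigma_range * sigma_range);
    float sum = 0.0f;
    float weight_sum = 0.0f;
    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            // Clamp neighbour coordinates to the image borders.
            const int nx = std::min(std::max(x + dx, 0), width - 1);
            const int ny = std::min(std::max(y + dy, 0), height - 1);
            const float value = in[ny * width + nx];
            const float diff = value - center;
            // Spatial weight (distance) times range weight (intensity difference).
            const float w = std::exp(-(dx * dx + dy * dy) * inv_2ss) *
                            std::exp(-(diff * diff) * inv_2sr);
            sum += w * value;
            weight_sum += w;
        }
    }
    // The centre sample always has weight 1, so weight_sum is never zero.
    return sum / weight_sum;
}

// Host-side stand-in for the NDRange: each (x, y) iteration is what one
// work-item would compute on the GPU.
std::vector<float> bilateral_filter(const std::vector<float>& in, int width,
                                    int height, int radius,
                                    float sigma_spatial, float sigma_range) {
    std::vector<float> out(in.size());
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            out[y * width + x] = bilateral_pixel(in, width, height, x, y, radius,
                                                 sigma_spatial, sigma_range);
        }
    }
    return out;
}
```

Each work-item reads (2r+1)² neighbours independently, which is exactly the access pattern the optimizations below try to improve.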

Several optimizations come to mind when looking at the basic bilateral kernel: accessing the input data through textures (OpenCL images) instead of fetching it from global memory, reducing global memory traffic by first staging data in local memory, loop unrolling, and so on. Unfortunately, some of these optimizations are not available on Imagination’s current hardware and OpenCL implementation.

As can be seen in the above table, the current hardware does not support local memory. As a result, it only supports work-groups of size 1 x 1 x 1, so work-items cannot share data with each other (each work-group contains a single work-item). This prevents the code from reusing data across work-items, which could otherwise make the algorithm run faster.
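To put a rough number on the data reuse that is lost, the sketch below counts global-memory reads per output pixel for a (2r+1) x (2r+1) window. The tiled variant describes a hypothetical local-memory version with tile x tile work-groups, which this hardware cannot run; the function names are illustrative, and caches are ignored, so real traffic may be lower.

```cpp
#include <cstdint>

// 1 x 1 x 1 work-groups (no local memory): every work-item fetches its
// whole window from global memory on its own.
std::uint64_t reads_per_pixel_no_sharing(std::uint64_t radius) {
    const std::uint64_t side = 2 * radius + 1;
    return side * side;
}

// Hypothetical tiled version: a tile x tile work-group copies its
// (tile + 2r)^2 input region (tile plus halo) into local memory once,
// then every work-item reads its neighbours from that shared copy.
double reads_per_pixel_tiled(std::uint64_t radius, std::uint64_t tile) {
    const std::uint64_t side = tile + 2 * radius;
    return static_cast<double>(side * side) / static_cast<double>(tile * tile);
}
```

For example, with radius 5 each work-item fetches 121 pixels on its own, whereas a 16 x 16 tile would need (16 + 10)² / 16² = 676 / 256 ≈ 2.64 global reads per pixel.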

The next optimization step would be to have the code read its input from images instead of global memory. As you may recall from the base kernel in the previous section, the bilateral filter uses two nested loops to read the values of the current pixel’s neighbours. It seems, however, that the current implementation requires all sample locations to be known at compile time for the compiler to take advantage of images. Since the neighbouring pixels are accessed inside the nested for loops, their locations are not known at compile time, so the potential performance gain from using images cannot be realized.

A possible solution would be to build the kernel code on the fly and manually unroll the loops so that those locations are known at compile time. Since the #pragma unroll directive is also not supported, and in a real-world application the filter size would change dynamically, this turns out to be quite a tedious task. I did not test whether this solution would yield better performance.
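The on-the-fly approach could look roughly like this: the host generates OpenCL C source in which every neighbour is read with a literal offset, then builds it with the usual `clCreateProgramWithSource` / `clBuildProgram` calls. This is a hypothetical, untested sketch; the kernel name `bilateral_unrolled`, its arguments and the sampler settings are assumptions, and a separate program would have to be built (or cached) for each filter radius.

```cpp
#include <sstream>
#include <string>

// Emits OpenCL C source for a bilateral kernel with the neighbour loops fully
// unrolled for a fixed radius, so every read_imagef offset is a literal.
std::string make_unrolled_bilateral_source(int radius) {
    std::ostringstream os;
    os << "__constant sampler_t smp = CLK_NORMALIZED_COORDS_FALSE |\n"
          "    CLK_ADDRESS_CLAMP_TO_EDGE | CLK_FILTER_NEAREST;\n"
          "__kernel void bilateral_unrolled(__read_only image2d_t src,\n"
          "                                 __global float* dst,\n"
          "                                 float inv_2ss, float inv_2sr) {\n"
          "  const int2 pos = (int2)(get_global_id(0), get_global_id(1));\n"
          "  const float c = read_imagef(src, smp, pos).x;\n"
          "  float sum = 0.0f, wsum = 0.0f, v, d, w;\n";
    for (int dy = -radius; dy <= radius; ++dy) {
        for (int dx = -radius; dx <= radius; ++dx) {
            // Each neighbour becomes straight-line code with constant offsets
            // and a constant squared distance for the spatial weight.
            os << "  v = read_imagef(src, smp, pos + (int2)(" << dx << ", " << dy
               << ")).x;\n"
               << "  d = v - c;\n"
               << "  w = exp(-" << (dx * dx + dy * dy)
               << ".0f * inv_2ss - d * d * inv_2sr);\n"
               << "  sum += w * v; wsum += w;\n";
        }
    }
    os << "  dst[pos.y * get_image_width(src) + pos.x] = sum / wsum;\n"
          "}\n";
    return os.str();
}
```

The generated kernel grows with (2r+1)² samples, so large radii produce long sources, which is part of what makes this approach tedious.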

Performance summary

The following table summarizes the best timings measured on both the CPU and the GPU, showing the substantial benefit the GPU brings. To get the most accurate timing measurements, we averaged the GPU timings over 50 runs. Also, running 8 native CPU threads instead of 4 did not yield better performance, as one might expect.
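The 4- and 8-thread CPU runs mentioned above split the filtering work across native threads. One common way to do this, assumed here since the CPU code is not shown, is to give each thread a contiguous band of image rows. `run_on_threads` and the `process_rows` callback are hypothetical names; the callback would run the same per-pixel filter as the GPU kernel over rows [begin, end).

```cpp
#include <algorithm>
#include <functional>
#include <thread>
#include <vector>

// Splits rows [0, height) into contiguous bands, one per thread, and runs
// process_rows(begin, end) on each band in parallel.
void run_on_threads(int height, unsigned num_threads,
                    const std::function<void(int, int)>& process_rows) {
    if (num_threads == 0) num_threads = 1;
    const int bands = static_cast<int>(num_threads);
    const int base = height / bands;
    const int extra = height % bands;
    std::vector<std::thread> workers;
    for (int t = 0; t < bands; ++t) {
        // Even split: the first (height % bands) bands get one extra row.
        const int begin = t * base + std::min(t, extra);
        const int end = begin + base + (t < extra ? 1 : 0);
        if (begin < end) workers.emplace_back(process_rows, begin, end);
    }
    // Wait for every band to finish before returning.
    for (std::thread& w : workers) w.join();
}
```

Because each row belongs to exactly one band, threads write disjoint parts of the output and need no locking.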

Conclusion

As the benchmarks show, the bilateral filter running on the GPU is approximately 3.5 times faster than the same filter running on the CPU with 4 threads. With the bilateral filter, or any other compute-intensive task, offloaded to the GPU, the CPU remains free to respond to other tasks while the GPU handles the heavy computation faster, improving overall system performance and efficiency.

We are looking forward to trying OpenCL on Imagination’s next-generation architecture, Rogue, which will hopefully bring more raw power and new features that improve on the performance we were able to get out of Imagination’s current hardware generation.

This project is partially funded by the European Union under the 7th Research Framework Programme (FET-Open SME), Grant agreement no. 309169.

Written by: Eyal Hirsch, GPU Computing Expert, Mobile GPU Leader, SagivTech.
