or Did you know there is no need to copy data from CPU to GPU and back?!

Eri Rubin

SagivTech Ltd.

Real shared memory is here. Just to be on the same page, I mean sharing a pointer of allocated memory between CPU and GPU without paying dearly for it.

For the past few years, all the major hardware vendors have been building their own SOCs (Systems on Chip), in which all or most of the components of the system sit on one piece of silicon. These systems are mostly targeted at low power, whether intended for the mobile market (Qualcomm, Imagination, NVIDIA [Tegra]) or traditional CPU-based markets (Intel and AMD). One of the main potential advantages of such systems is that there is only one physical RAM, i.e. every processor in the system uses the same RAM. This means that, potentially, no memory copies are needed to pass data between different processors on the system. While theory is always nice, achieving this in practice is much harder. First, I have to say, I’m not a hardware engineer. My field is software: low-level, highly optimized software, which usually requires intimate knowledge of the hardware, but still…

Ok, so what is the problem? Well, as far as I understand it, one word can sum it up: caches. Today no processor accesses the RAM directly. “Why?” you might ask. Well, because RAM is SLOW, or more precisely, relatively slow. It can be two to three orders of magnitude slower than the processor, i.e. a processor can do 100-1000 addition operations in the time it takes to load one float from RAM into a register. Now, I know this is an oversimplification of the system. There are many techniques used to alleviate this problem, and the critical one is caching. Cache memory is usually transparent to the user. The program asks the system to load data from a pointer in memory, and the system then checks whether that data is in the local cache, which is much faster than RAM but much smaller. If the data resides in the local cache, the processor gets it in the blink of an eye. If not, the system fetches a whole chunk of memory (a cache line) from RAM into the cache. Why a whole chunk? Well, fetching a chunk is much more efficient than fetching single values, and it is assumed that if you want data from a certain location in RAM, there is a good chance that you will soon want data from that same region. The same goes for writing out data: it is first written to a register, then to the cache, and the cache is synchronized with the RAM at some later point.
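
To make the "whole chunk" idea concrete, here is a minimal C++ sketch of a toy direct-mapped cache that only counts hits and misses. It is a model, not a description of any real processor: the class `ToyCache`, the helper `misses_for_sequential_floats`, the 64-slot size and the line sizes passed in are all assumptions chosen for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Toy direct-mapped cache. It only tracks WHICH line of RAM each slot holds,
// which is enough to count hits and misses. All sizes are assumptions.
class ToyCache {
public:
    ToyCache(std::size_t line_bytes, std::size_t num_slots)
        : line_bytes_(line_bytes), slots_(num_slots, UINT64_MAX) {
        assert(line_bytes > 0 && num_slots > 0);
    }

    // Simulate loading one value at `address`. Returns true on a cache hit.
    bool load(std::uint64_t address) {
        const std::uint64_t line = address / line_bytes_;  // chunk of RAM holding the address
        const std::size_t slot = static_cast<std::size_t>(line % slots_.size());
        if (slots_[slot] == line) {
            ++hits_;
            return true;
        }
        slots_[slot] = line;  // miss: the whole line is fetched from RAM
        ++misses_;
        return false;
    }

    std::size_t hits() const { return hits_; }
    std::size_t misses() const { return misses_; }

private:
    std::uint64_t line_bytes_;
    std::vector<std::uint64_t> slots_;  // UINT64_MAX marks an empty slot
    std::size_t hits_ = 0;
    std::size_t misses_ = 0;
};

// Read `count` consecutive floats and report how many loads had to go to RAM.
std::size_t misses_for_sequential_floats(std::size_t count, std::size_t line_bytes) {
    ToyCache cache(line_bytes, 64);  // 64 slots: an arbitrary toy size
    for (std::size_t i = 0; i < count; ++i) {
        cache.load(i * sizeof(float));
    }
    return cache.misses();
}
```

Assuming 4-byte floats and a 64-byte line, only one load in every 16 has to go to RAM when walking an array in order; if the "line" is shrunk to a single float, every load becomes a miss.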

And therein lies the problem: although the GPU and CPU share the same RAM, they have separate caches. So if we want them to share the same pointer in RAM, the hardware must make sure that the caches of the first processor are flushed to RAM before the other processor starts working on that memory. The other processor’s cache must also be invalidated, so that it loads the new data from RAM again instead of using a stale copy. This is something that just wasn’t there before; it is a new capability. But as it turns out, new generations of hardware are able to do just that, and very efficiently.
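
The stale-data problem can be sketched in a few lines of C++. This is a toy software model of two private write-back caches in front of one RAM, not how any particular chip implements coherency; `PrivateCache`, `Ram` and `gpu_view_after_cpu_write` are hypothetical names invented for this illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <unordered_map>
#include <vector>

using Ram = std::vector<int>;

// Toy write-back cache private to one processor (a model, not real hardware).
class PrivateCache {
public:
    explicit PrivateCache(Ram& ram) : ram_(ram) {}

    int read(std::size_t addr) {
        assert(addr < ram_.size());
        auto it = lines_.find(addr);
        if (it == lines_.end()) {
            // Miss: pull the value from RAM and keep a private copy.
            it = lines_.emplace(addr, Line{ram_[addr], false}).first;
        }
        return it->second.value;
    }

    void write(std::size_t addr, int value) {
        assert(addr < ram_.size());
        lines_[addr] = Line{value, true};  // stays in the cache until flushed
    }

    // Write every dirty value back to RAM.
    void flush() {
        for (auto& entry : lines_) {
            if (entry.second.dirty) {
                ram_[entry.first] = entry.second.value;
                entry.second.dirty = false;
            }
        }
    }

    // Drop all cached copies so the next read goes to RAM.
    // In this toy model unflushed writes are lost, so flush first.
    void invalidate() { lines_.clear(); }

private:
    struct Line {
        int value;
        bool dirty;
    };
    Ram& ram_;
    std::unordered_map<std::size_t, Line> lines_;
};

// What does the "GPU" read at address 0 after the "CPU" writes 42 there?
int gpu_view_after_cpu_write(bool flush_cpu, bool invalidate_gpu) {
    Ram ram(1, 0);
    PrivateCache cpu(ram);
    PrivateCache gpu(ram);
    gpu.read(0);       // GPU caches the old value, 0
    cpu.write(0, 42);  // the new value lives only in the CPU cache
    if (flush_cpu) cpu.flush();
    if (invalidate_gpu) gpu.invalidate();
    return gpu.read(0);
}
```

In this model the GPU sees the new value only when both steps happen: the CPU cache is flushed and the GPU cache is invalidated. Skipping either one leaves the GPU reading the old value.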

“Why do we need this pointer sharing?” you ask. Well, there are a few reasons. The first is that copying data back and forth can take a lot of time, sometimes more than just doing the computation on the same processor. For example, let’s say we are computing on the CPU and want to perform an operation that maps really nicely to the GPU. If just copying the data over to the GPU takes more time than performing the computation on the CPU, offloading gains nothing. Another consideration is power and heat. This might surprise you, but memory uses a lot of power relative to the processors, and accordingly produces more heat, so every copy avoided saves energy. Another reason is the size of RAM: SOC systems usually have limited RAM, and if both processors use the same memory allocation instead of each keeping its own copy, they can allocate bigger buffers.
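
The first reason can be written down as a back-of-the-envelope check. The sketch below is a deliberately simple cost model in C++, assuming the copy time is just bytes divided by bandwidth and that pointer sharing makes the copy free; `OffloadEstimate`, `offload_seconds` and `offload_pays_off` are hypothetical names, and any numbers you feed in would have to come from your own measurements.

```cpp
#include <cassert>

// Rough inputs for one offload decision. All fields are estimates the
// caller supplies; nothing here is measured by the code.
struct OffloadEstimate {
    double cpu_seconds;            // time to run the operation on the CPU
    double gpu_seconds;            // time to run it on the GPU, excluding copies
    double bytes_moved;            // total bytes copied to the GPU and back
    double copy_bytes_per_second;  // effective copy bandwidth
};

// Total GPU-side time. With a shared pointer the copy term is assumed to be zero.
double offload_seconds(const OffloadEstimate& e, bool shared_pointer) {
    assert(e.copy_bytes_per_second > 0.0);
    const double copy_seconds =
        shared_pointer ? 0.0 : e.bytes_moved / e.copy_bytes_per_second;
    return e.gpu_seconds + copy_seconds;
}

// Offloading is worth it only if it beats staying on the CPU.
bool offload_pays_off(const OffloadEstimate& e, bool shared_pointer) {
    return offload_seconds(e, shared_pointer) < e.cpu_seconds;
}
```

Under this model, an operation whose copy term alone exceeds `cpu_seconds` never pays off with explicit copies, but can pay off once the pointer is shared and the copy term drops out.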

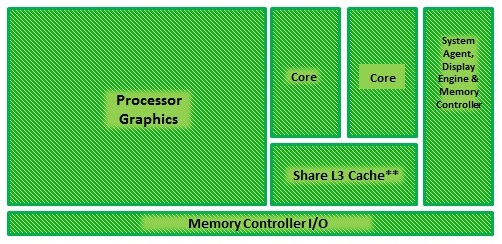

Fig 1. A sample diagram of the Intel platform

So now for the good news: we recently worked on an Intel SOC and an NVIDIA SOC, both of which have this feature working very nicely. On Intel we used the Broadwell and Skylake architectures, both of which allowed pointer sharing without the need for any sync operations, using the fine-grained SVM (Shared Virtual Memory) feature of OpenCL 2.0. As you can see in Fig 1, the CPU and GPU share the level 3 cache.

On NVIDIA we used the TX1 dev board; here, using CUDA managed memory, we also got the desired outcome. Considering this is only NVIDIA’s second generation of SOC with a compute-capable GPU, this achievement is very impressive. I haven’t yet had the chance to test AMD’s APU platforms, but AMD has been pushing the heterogeneous platform idea for quite a few years now, so hopefully they support this feature as well.

Pointer sharing will greatly help the adoption of the GPU on such systems for offloading heavy computations, simplifying code development and eventually offering better performance.

Eri Rubin – VP of Research & Development

Eri Rubin is head of development, with 15 years of experience as a software developer. Prior to joining SagivTech, Eri was Team Leader of CUDA Development at OptiTex. He worked as a Senior Graphics Developer for IDT-E Toronto on animated movies and TV specials. Eri has a Master of Science in Parallel Computing from the Hebrew University in Jerusalem. He received his Bachelor of Science in Computer Science & Life Science and also studied Animation for 3 years at Bezalel Arts Academy, Jerusalem.

